As artificial intelligence increasingly permeates the public media sphere, concerns about the ethical implications of these technologies are growing. From data centres consuming massive amounts of energy and water to private information being collected and used to train AI models, the ethical concerns around AI are plentiful, yet they can also seem abstract.

The Public Value Benchmarking project addresses these concerns by making public values like “transparency”, “sustainability”, “inclusivity”, and “privacy” visible, discussable, and comparable in relation to AI applications.

As part of this work, an interactive installation was exhibited in the main hall at the Society 5.0 festival at The Social Hub in Amsterdam in October.

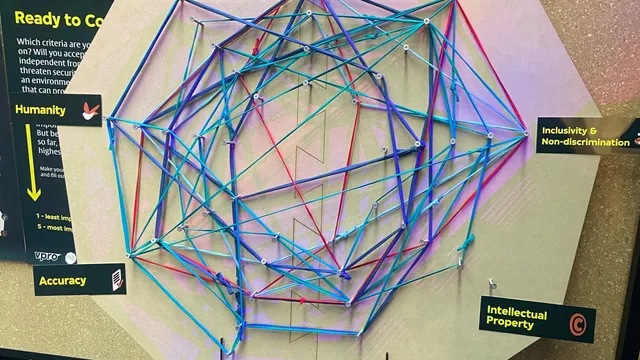

Here, visitors were asked to actively consider which ethical criteria they prioritise when choosing an AI tool by placing a string on a polygon to create a spider diagram. The strings had a fixed length, making it impossible to give every criterion the highest score and provoking questions like: Will you accept a privacy risk if the AI is independent from tech companies that threaten security of supply? Will you tolerate an environmentally damaging technology if it provides highly accurate results?
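The fixed-length constraint can be illustrated with a little geometry. The sketch below is not a description of the actual installation: it assumes the string forms a closed loop through one point on each evenly spaced axis, and the axis names, score scale, and string length are hypothetical. Under those assumptions, the string needed for a set of scores is the perimeter of the resulting spider-diagram polygon.

```python
import math

def string_length_needed(scores):
    """Perimeter of the spider-diagram polygon for the given axis scores.

    Assumes evenly spaced axes and a closed loop of string (an assumption,
    not a documented property of the installation).
    """
    n = len(scores)
    if n < 3:
        raise ValueError("a spider diagram needs at least three axes")
    angle = 2 * math.pi / n  # angle between neighbouring axes
    total = 0.0
    for i in range(n):
        a, b = scores[i], scores[(i + 1) % n]
        # Law of cosines: distance between points on neighbouring axes.
        total += math.sqrt(a * a + b * b - 2 * a * b * math.cos(angle))
    return total

def fits(scores, string_length):
    """True if the string is long enough to span this set of scores."""
    return string_length_needed(scores) <= string_length + 1e-9

# Hypothetical example: six criteria scored from 0 (centre) to 1 (outer edge).
criteria = ["privacy", "sustainability", "transparency",
            "inclusivity", "accuracy", "independence"]
top_scores_everywhere = [1.0] * len(criteria)
with_trade_offs = [1.0, 0.3, 0.8, 0.6, 0.9, 0.4]

STRING_LENGTH = 5.0  # hypothetical; shorter than the outer perimeter
print(fits(top_scores_everywhere, STRING_LENGTH))
print(fits(with_trade_offs, STRING_LENGTH))
```

With any string shorter than the outer perimeter, raising one score forces another to drop, which is the trade-off the installation made tangible.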

The physical limitation of the strings prompted many discussions about the inevitable ethical trade-offs that the development of new technology brings. A few points stood out:

- Many visitors were quick to deprioritise environmental impact in favour of themes like privacy and humanity.

- Visitors generally do not prioritise the protection of intellectual property when choosing an AI tool.

- Many feel that ethical AI is not possible due to the market dominance of big tech companies.

The installation confirmed the need for tangible objects and frameworks that enable discussion of AI ethics in media. A main outcome of the Public Value Benchmarking project is a comprehensive assessment framework that can be used to create an ethical profile of any given AI tool.

The AI tool under assessment is given a score on nine different public values, based on carefully selected benchmarks and units of measurement. For example, the value “privacy” is measured by GDPR compliance and principles of privacy by design. The assessment ultimately results in a visual ethical risk-profile diagram for the given AI tool.

The AI tool does not pass or fail the test. Instead, the framework highlights the strengths and weaknesses of the tool to enable more informed decision-making.
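As a rough illustration of how such a profile might be represented, the sketch below averages benchmark scores into one score per public value and ranks the values without applying any pass/fail threshold. It is not the project's framework: the 0 to 1 scale, the averaging rule, the scores, and the placeholder benchmark names are all assumptions, and only three of the nine values are shown.

```python
from statistics import mean

# Hypothetical benchmark scores on an assumed 0-1 scale. Only the two
# "privacy" benchmarks are named in the text; the others are placeholders.
benchmark_scores = {
    "privacy": {"gdpr_compliance": 0.8, "privacy_by_design": 0.6},
    "sustainability": {"placeholder_benchmark": 0.3},
    "transparency": {"placeholder_benchmark": 0.9},
}

def ethical_profile(benchmarks):
    """Average each value's benchmark scores into one score per public value."""
    return {value: mean(scores.values()) for value, scores in benchmarks.items()}

def strongest_to_weakest(profile):
    """Rank the public values by score; deliberately no pass/fail cut-off."""
    return sorted(profile, key=profile.get, reverse=True)

profile = ethical_profile(benchmark_scores)
for value in strongest_to_weakest(profile):
    print(f"{value}: {profile[value]:.2f}")
```

The per-value scores in `profile` are the kind of data a risk-profile diagram could be drawn from.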

The next steps in the project are refining this framework and testing it on real AI tools. The results of the assessment can then inform discussions about which AI tools should be used in public media projects.